ElasticSearch

简介

- 基于 Apache Lucene 开源的分布式、高扩展、近实时的搜索引擎,主要用于海量数据快速存储,实时检索,高效分析的场景。通过简单易用的 RESTful API,隐藏 Lucene 的复杂性,让全文搜索变得简单。

- 将全文检索、数据分析以及分布式技术结合在一起,形成了独一无二的 ES;

- 提供数据库没有的功能 全文检索,同义词处理,相关度排名,复杂数据分析,海量数据的近实时处理

- 底层基于 Lucene 开发,针对 Lucene 的局限性,ES 提供了 RESTful API 风格的接口、支持分布式、可水平扩展,同时它可以被多种编程语言调用

- 除了进行全文检索,也支持聚合/排序。可以把 ES 当作搜索引擎来使用

安装

创建用户

ES不能使用root用户启动,创建一个用户

groupadd elasticsearch

useradd es_zwq

passwd es_zwq (zwqzwqzwq)

usermod -G elasticsearch es_zwq

visudo // 给sudo权限

es_zwq ALL=(ALL) ALL // 添加解压安装

mkdir /usr/local/es

tar -zxvf elasticsearch-7.6.1-linux-x86_64.tar.gz

chown -R es_zwq elasticsearch-7.6.1

su es_zwq // 切换用户配置

cd elasticsearch-7.6.1

mkdir log

mkdir data

cd config

vim elasticsearch.yml

cluster.name: my-application // 集群名称

node.name: node-1 // 这个节点

network.host: 0.0.0.0 // 任何IP都能访问

http.port: 9200

discovery.seed_hosts: ["192.168.31.8"]

cluster.initial_master_nodes: ["node-1"]

// 以下新增

bootstrap.system_call_filter: false // 关闭系统调用过滤器检查

bootstrap.memory_lock: false // 是否锁住内存,避免交换(swapped)带来的性能损失,默认值是: false

http.cors.enabled: true // 允许ElasticSearch跨域

http.cors.allow-origin: "*" // 允许所有域名跨域

vim jvm.options

-Xms1g

-Xmx1g启动异常

max file descriptors[4096] for elasticsearch process likely too low…

ES需要大量创建索引文件,需要解除linux打开文件最大数目限制sudo vi /etc/security/limits.conf * soft nofile 65536 * hard nofile 65536 重新登录用户生效max number of threads[1024] for user [es] likely too low

修改普通用户可以创建最大线程数centos6 sudo vi /etc/security/limits.d/90-nproc.conf centos7 sudo vi /etc/security/limits.d/20-nproc.conf * soft nproc 1024 // 改为 * soft nproc 4096max virtual memory areas vm.max_map_count[65530] likely too low.

调大系统的虚拟内存vi /etc/sysctl.conf // 追加 vm.max_map_count=655360执行sysctl -p

重新连接

启动

cd bin

./elasticsearch -d // 后台启动安装Kibana

解压配置

tar -zxvf kibana.tar.gz

cd .../config

vim kibana.yml

server.port: 5601

server.host: "192.168.31.8"

elasticsearch.hosts: ["http://192.168.31.8:9200"]启动

同样不能root启动,给权限

chown -R es_zwq kibana-7.6.1-linux-x86_64cd bin

nohup ./kibana &访问192.168.31.8:5601

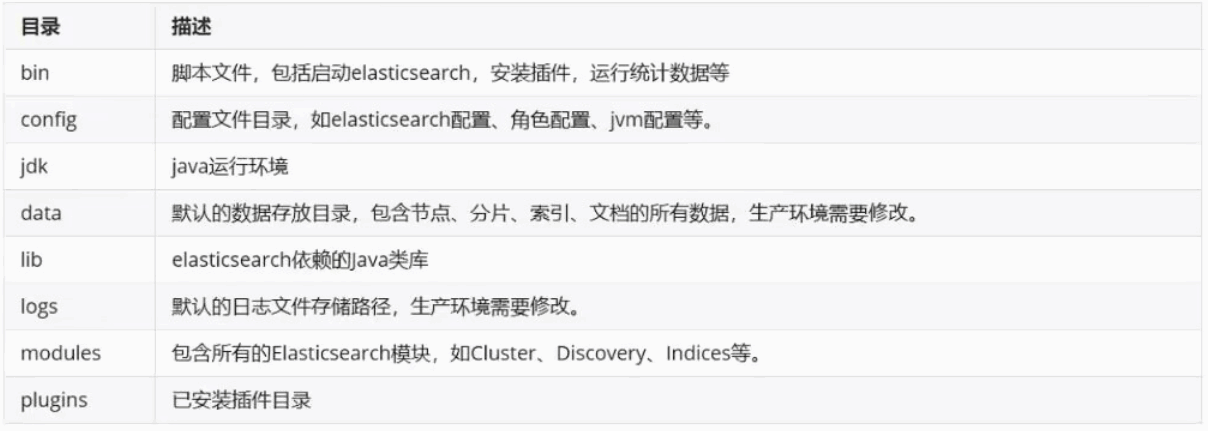

文件目录

分词插件

bin/elasticsearch-plugin list

bin/elasticsearch-plugin install analysis-icu

bin/elasticsearch-plugin remove analysis-icuPOST _analyze

{

"analyzer": "standard",

"text": "中华民族"

}ik分词器:https://github.com/medcl/elasticsearch-analysis-ik/releases

下载解压到plugins,解压后zip删掉

重启服务生效

粗粒度拆分

POST _analyze

{

"analyzer": "ik_smart",

"text": "中华民族"

}

细粒度拆分

POST _analyze

{

"analyzer": "ik_max_word",

"text": "中华民族"

}使用

创建

指定ik分词,index: false(默认true,false表示这个字段不进行索引,不记录),null_value:可以查询null值

copy_to设置:将字段数值拷贝到目标字段,满足一些特定搜索需求,copy_to字段不出现在_source

index_options:控制倒排索引记录的内容

- docs:记录docid

- freqs:记录docid和词频

- positions:记录docid,词频,pisition位置

- offsets:记录docid,词频,position,字符offset偏移量

text类型默认pisitions,其它默认docsPUT /test_db { "settings":{ "index":{ "analysis.analyzer.default.type":"ik_max_word" } }, "mappings":{ "properties": { "id": {"type": "long", "index": false, "index_options": "offsets"}, "title": {"type": "text"}, "content": {"type": "text", "null_value": "NULL"}, "province": {"type": "keyword", "copy_to": "full_address"}, "city": {"type": "text", "copy_to": "full_address"} } } }

修改mapping字段

- 新增字段,根据dynamic类型不同

- dynamic:true,新字段写入,mapping会更新

- dynamic:false,新字段写入,mapping不会更新,但数据会写入

- dynamic:strict,新字段写入,会失败

这个可以修改PUT /test_db/_mapping { "dynamic": true }

- 已有字段,修改数据类型,不支持修改字段定义,一旦生成不能修改

如果需要改变字段类型,需要重建索引reindex api// 创建索引和mapping PUT /test2_db { "settings":{ "index":{ "analysis.analyzer.default.type":"ik_max_word" } }, "mappings":{ "properties": { "id": {"type": "long", "index": false, "index_options": "offsets"}, "title": {"type": "text"}, "content": {"type": "text"} } } } // 重建索引 POST _reindex { "source": { "index": "test_db" }, "dest": { "index": "test2_db" } } // 删掉旧的 DELETE /test_db // 取别名 PUT /test2_db/_alias/test_db

查询

GET /article/_doc/1

GET /article/_doc/_search?q=id:11

GET /article/_doc/_search?q=id[11 TO 22]

GET /article/_doc/_search?q=id:<=22

GET /article/_doc/_mget

{

"ids":[1,2]

}

GET _mget

{

"docs":[

{"_index": "test_db","_id":1}

{"_index": "article","_id":2}

]

}

分页查询 from=*&size=*

GET /article/_doc/_search?q=age:20&from=0&size=2

对结果只输出某些字段

GET /article/_doc/_search?_source=name,desc

对结果进行排序

GET /article/_doc/_search?sort=age:desc

POST /_msearch

{"index":"article"}

{"query":{"match":{"content":"python"}}}

{"index":"test_de"}

{"query":{"match":{"name":"java"}}}删除

DELETE /test_db/_doc/1POST /article/_delete_by_query

{

"query": {

"match_all": {}

}

}添加修改

// put post都能创建或修改,put需指定id

PUT /test_db/_doc/1

{

"name":"sumengnan",

"content":"我爱你中国",

"order":25

}

// POST自动生成ID

POST /test_db/_doc

{

"name":"张三",

"content":"中华人民共和国",

"order":20

}

POST /test_db/_doc/2?if_seq_no=3&if_primary_term=1

// 局部更新

POST /test_db/_update/1

{

"doc":{

"order": 28

}

}批量操作

// 批量创建

POST _bulk

{"create":{"_index":"article", "_type":"_doc", "_id":3}}

{"id":3,"title":"标题","content":"内容","tags":["java", "ob"],"create_time":1234123123}

{"create":{"_index":"article", "_type":"_doc", "_id":4}}

{"id":4,"title":"标题2","content":"内容2","tags":["python", "ob"],"create_time":12341212123}

// 批量创建或替换

POST _bulk

{"index":{"_index":"article", "_type":"_doc", "_id":3}}

{"id":3,"title":"标题a","content":"内容a","tags":["java", "ob"],"create_time":1234123123}

{"index":{"_index":"article", "_type":"_doc", "_id":4}}

{"id":4,"title":"标题b","content":"内容b","tags":["python", "ob"],"create_time":12341212123}

// 批量删除

POST _bulk

{"delete":{"_index":"article","_type":"_doc","_id":3}}

{"delete":{"_index":"article","_type":"_doc","_id":4}}

// 批量修改

POST _bulk

{"update":{"_index":"article","_type":"_doc","_id":3}}

{"doc":{"title":"java base"}}

{"update":{"_index":"article","_type":"_doc","_id":4}}

{"doc":{"title":"java test"}}

// 综合

POST _bulk

{"delete":{"_index":"article","_type":"_doc","_id":3}}

{"create":{"_index":"article", "_type":"_doc", "_id":3}}

{"id":3,"title":"标题","content":"内容","tags":["java", "ob"],"create_time":1234123123}

{"update":{"_index":"article","_type":"_doc","_id":3}}

{"doc":{"title":"java base"}}DSL查询

高级查询,用body进行交互

// match_all 默认返回10条数据

GET /test_db/_search

{

"query":{

"match_all":{}

},

"from":0,

"size":5

}// 深度查询方案 -> 分页查询scroll:查询后返回游标ID值:_scroll_id

// scroll=1m,采用游标查询,保持游标查询窗口一分钟

GET /test_db/_search?scroll=1m

{

"query":{"match_all":{}},

"size":2

}

// 会返回游标ID,然后用游标查询

GET /_search/scroll

{

"scroll":"1m",

"scroll_id":"DGASDFASDASDFASDGFASDGASDFASDF"

}// 指定字段排序,排序会让score失效,source返回指定字段

GET /test_db/_search

{

"query":{

"match_all":{}

},

"from":0,

"size":5,

"sort":[{"order":"desc"}],

"_source":["name","content"]

}// match:根据关键字分词,query:指定匹配值,operator:匹配条件类型(and,or),minimum_should_match:最低匹配度,至少匹配几个关键词

GET /article/_search

{

"query":{

"match":{

"content":{"query": "跨越无法","operator":"and","minimum_should_match":0}

}

}

}// match_phrase:匹配分词都要包含,并且需要连续,可配置slop参数:表示匹配分词之间最多间隔几个分词

GET /article/_search

{

"query":{

"match_phrase":{

"content":{"query":"无法跨越","slop":2}

}

}

}// 多字段查询multi_match

GET /article/_search

{

"query":{

"multi_match":{

"query":"无法跨越",

"operator": "and",

"fields":["content","title"]

}

}

}// query_string

允许在单个查询字符串中指定AND | OR | NOT条件

GET /article/_search

{

"query":{

"query_string":{

"query":"无法 OR 跨越"

}

}

}

// 指定字段

GET /article/_search

{

"query":{

"query_string":{

"default_field":"content",

"query":"无法 OR 跨越"

}

}

}

// 多个字段

GET /article/_search

{

"query":{

"query_string":{

"fields":["title","content"],

"query":"无法 OR (自动 AND 装配)"

}

}

}// simple_query_string 会忽略错误的语法

GET /article/_search

{

"query":{

"simple_query_string":{

"fields":["title","content"],

"query":"无法 + 装配\"",

"default_operator":"AND"

}

}

}// 关键词查询term

GET /article/_search

{

"query":{

"term":{

"title.keyword":{"value":"java"}

}

}

}

// 避免算分,提高性能

GET /article/_search

{

"query":{

"constant_score":{

"filter":{

"term":{

"title.keyword":{"value":"java"}

}

}

}

}

}

// bool,日期,数字,可以用term精确匹配

GET /article/_search

{

"query":{

"term":{

"id":{"value":2}

}

}

}// prefix:词条以指定的value为前缀的:对分词后的词进行前缀搜索

GET /article/_search

{

"query":{

"prefix":{

"content":{"value":"java"}

}

}

}// wildcard 查询,不是比较开头,支持复杂匹配模式

GET /article/_search

{

"query":{

"wildcard":{

"content":{"value":"java*"}

}

}

}

// keywork,不受分词影响

GET /article/_search

{

"query":{

"wildcard":{

"content.keyword":{"value":"无法*"}

}

}

}// range范围查询

GET /article/_search

{

"query":{

"range":{

"id":{"gte":2,"lte":5}

}

}

}

// 日期

PUT /zzz_db

{

"settings": {

"index":{

"analysis.analyzer.default.type":"ik_max_word"

}

}

}

POST _bulk

{"create":{"_index":"zzz_db", "_type":"_doc","_id":1}}

{"id":1,"title":"标题1","content":"冯诺依曼也会博弈论","tags":["java1", "ob"],"create_time":"2023-01-03"}

{"create":{"_index":"zzz_db", "_type":"_doc", "_id":2}}

{"id":2,"title":"标题1","content":"8岁我就会微积分","tags":["python1", "ob"],"create_time":"2011-04-03"}

GET /zzz_db/_search

{

"query":{

"range":{

"create_time":{"gte":"now-2y"}

}

}

}// 模糊查询fuzzy:有时候打错字,这个能模糊查询到fuzziness:最大模糊数(0-2),prefix_length:内容开头n个字符必须完全匹配

GET /article/_search

{

"query":{

"fuzzy":{

"content":{"value":"如门","fuzziness":1,"prefix_length":0}

}

}

}// 高亮查询highlight:让关键词高亮

// pre_tags前缀标签 post_tags后缀标签 tags_schema设置为styled可以使用内置高亮样式 require_field_match多字段高亮需要设置false

GET /article/_search

{

"query":{

"term":{

"content":{"value":"java"}

}

},

"highlight":{

"pre_tags":["<span style='color:red'>"],

"post_tags":["</span>"],

"fields":{

"*":{}

}

}

}

// 多字段高亮

GET /article/_search

{

"query":{

"term":{

"content":{"value":"java"}

}

},

"highlight":{

"pre_tags":["<span style='color:red'>"],

"post_tags":["</span>"],

"require_field_match":"false",

"fields":{

"tags":{},

"content":{}

}

}

}相关性

GET /article/_search

{

"query":{

"boosting":{

"positive":{

"term": {"content":"无法"}

},

"negative":{

"term": {"content":"实现"}

},

"negative_boost": 0.2

}

}

}布尔查询

- must: &&必须匹配,贡献算分

- should: ||选择性匹配,贡献算分

- must_not: !,不贡献算分

- filter:必须匹配,不贡献算分

GET /article/_search { "query":{ "bool": { "must":[ { "match": {"title":"标题1"} } ] } } } GET /article/_search { "query":{ "bool": { "must":[ { "match": {"title":"标题1"} } ], "should":[ { "match": {"title":"标题1"} }, { "match": {"content": "java"} } ], "minimum_should_match": 1 } } }

springboot

依赖配置

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-elasticsearch</artifactId>

</dependency>spring:

data:

elasticsearch:

rest:

# 如果是集群,用逗号隔开

uris: 192.168.31.8:9200

#username: zzz

#password: zzz使用测试

@Data

@NoArgsConstructor

@AllArgsConstructor

@Document(indexName = "user")

public class UserDO implements Serializable {

private static final long serialVersionUID = -4977746738310054290L;

@Id

private Integer id;

private String name;

@Field(type = FieldType.Integer)

private Integer age;

@Field(index = true, store = true, type = FieldType.Text, analyzer = "ik_smart")

//Text可以分词 ik_smart=粗粒度分词 ik_max_word 为细粒度分词

private String description;

private String address;

@Field(type = FieldType.Date)

private Date createTime;

@Field(type = FieldType.Date)

private Date updateTime;

}测试类

@RunWith(SpringRunner.class)

@SpringBootTest

class ElasticsearchDemoApplicationTests {

@Autowired

private ElasticsearchRestTemplate elasticsearchRestTemplate;

@Test

void insert() {

// 新增或修改

UserDO user = new UserDO(1, "张三", 24, "程序员", "重庆观音桥", new Date(), new Date());

elasticsearchRestTemplate.save(user);

}

@Test

void test() {

// 新增或修改

List<UserDO> users = new ArrayList<>();

users.add(new UserDO(1, "张三", 24, "程序员", "重庆观音桥", new Date(), new Date()));

users.add(new UserDO(2, "李四", 24, "程序员2", "重庆", new Date(), new Date()));

users.add(new UserDO(3, "王五", 24, "程序员3", "重庆南岸", new Date(), new Date()));

users.add(new UserDO(4, "张三丰", 24, "打工仔", "重庆渝北新牌坊", new Date(), new Date()));

users.add(new UserDO(5, "李四迁", 24, "运动员", "重庆渝中区上清寺", new Date(), new Date()));

users.add(new UserDO(6, "王五六", 24, "军人", "重庆市区", new Date(), new Date()));

elasticsearchRestTemplate.save(users);

}

@Test

void testt() {

// 修改单条记录部分数据

Document document = Document.create();

document.put("address", "北京天安门");

UpdateQuery updateQuery = UpdateQuery.builder("4")

.withDocument(document)

.build();

elasticsearchRestTemplate.update(updateQuery, IndexCoordinates.of("user"));

// 修改多条记录部分数据

List<UpdateQuery> updateQueryList = new ArrayList<>();

for (int i = 1; i <= 5; i++) {

Document document2 = Document.create();

document2.put("age", 20 + i);

UpdateQuery updateQuery2 = UpdateQuery.builder(String.valueOf(i))

.withDocument(document2)

.build();

updateQueryList.add(updateQuery2);

}

elasticsearchRestTemplate.bulkUpdate(updateQueryList, IndexCoordinates.of("user"));

}

@Test

void example() {

// 获取单条数据

UserDO userDO = elasticsearchRestTemplate.get("1", UserDO.class, IndexCoordinates.of("user"));

// 删除单条

elasticsearchRestTemplate.delete("1", IndexCoordinates.of("user"));

System.out.println(userDO);

// 条件删除

NativeSearchQuery nativeSearchQuery = new NativeSearchQueryBuilder()

.withQuery(QueryBuilders.termQuery("name", "张三"))

.build();

elasticsearchRestTemplate.delete(nativeSearchQuery, UserDO.class, IndexCoordinates.of("user"));

}

@Test

void deleteDocumentAll() {

NativeSearchQuery nativeSearchQuery = new NativeSearchQueryBuilder()

.withQuery(QueryBuilders.matchAllQuery())

.build();

elasticsearchRestTemplate.delete(nativeSearchQuery, UserDO.class, IndexCoordinates.of("user"));

}

@Test

void test2() {

// 排序模糊查找

NativeSearchQuery searchQuery = new NativeSearchQueryBuilder()

.withSort(SortBuilders.fieldSort("age").order(SortOrder.ASC))

.withQuery(QueryBuilders.fuzzyQuery("name", "张三"))

.build();

SearchHits<UserDO> hits = elasticsearchRestTemplate.search(searchQuery, UserDO.class, IndexCoordinates.of("user"));

System.out.println(hits);

if (!CollectionUtils.isEmpty(hits.getSearchHits())) {

List<UserDO> users = hits.getSearchHits().stream().map(SearchHit::getContent).collect(Collectors.toList());

users.forEach(item -> {

System.out.println(item);

});

}

}

@Test

void test3() {

// 多条件查找,分页

NativeSearchQuery searchQuery = new NativeSearchQueryBuilder()

.withSort(SortBuilders.fieldSort("age").order(SortOrder.ASC))

.withQuery(QueryBuilders.boolQuery()

.must(QueryBuilders.fuzzyQuery("address", "重庆"))

.must(QueryBuilders.rangeQuery("age").gte(20)))

.withPageable(PageRequest.of(1, 5))

.build();

SearchHits<UserDO> hits = elasticsearchRestTemplate.search(searchQuery, UserDO.class, IndexCoordinates.of("user"));

if (!CollectionUtils.isEmpty(hits.getSearchHits())) {

List<UserDO> users = hits.getSearchHits().stream().map(SearchHit::getContent).collect(Collectors.toList());

users.forEach(item -> {

System.out.println(item);

});

}

}

@Test

void test4() {

// 多条件查找

BoolQueryBuilder queryBuilder = QueryBuilders.boolQuery()

.filter(QueryBuilders.rangeQuery("age").from(0).to(30))

.filter(QueryBuilders.termQuery("address.keyword", "重庆"))

.filter(QueryBuilders.fuzzyQuery("name", "李四"));

NativeSearchQuery build = new NativeSearchQueryBuilder()

.withQuery(queryBuilder)

.withPageable(PageRequest.of(0, 5))

.build();

SearchHits<UserDO> hits = elasticsearchRestTemplate.search(build, UserDO.class, IndexCoordinates.of("user"));

if (!CollectionUtils.isEmpty(hits.getSearchHits())) {

List<UserDO> users = hits.getSearchHits().stream().map(SearchHit::getContent).collect(Collectors.toList());

users.forEach(item -> {

System.out.println(item);

});

}

}

@Test

void simple() {

// 简单查询

NativeSearchQueryBuilder query = new NativeSearchQueryBuilder();

BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();

boolQueryBuilder.must(QueryBuilders.matchQuery("name", "张三"));

boolQueryBuilder.must(QueryBuilders.matchQuery("address", "重庆"));

query.withQuery(boolQueryBuilder);

query.withPageable(PageRequest.of(0, 5));

query.withSort(SortBuilders.fieldSort("age").order(SortOrder.DESC));

query.withCollapseField("name.keyword"); // 去重

SearchHits<UserDO> searchHits = elasticsearchRestTemplate.search(query.build(), UserDO.class);

List<UserDO> users = new ArrayList<>();

for (SearchHit<UserDO> searchHit : searchHits) {

System.out.println(searchHit.getContent());

users.add(searchHit.getContent());

}

}

@Test

void simple2() {

// 聚合,根据每个name,查询address包含重庆计数 -> 张三:1 李四:2

NativeSearchQueryBuilder query = new NativeSearchQueryBuilder();

BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();

boolQueryBuilder.must(QueryBuilders.matchQuery("address", "重庆"));

query.withQuery(boolQueryBuilder);

query.withPageable(PageRequest.of(0, 5));

query.withSort(SortBuilders.fieldSort("age").order(SortOrder.DESC));

// 作为聚合的字段不能是text类型。所以,author的mapping要有keyword,且通过author.keyword聚合

query.addAggregation(AggregationBuilders.terms("per_count").field("name.keyword"));

// 不需要获取source结果集,在aggregation里可以获取结果

query.withSourceFilter(new FetchSourceFilterBuilder().build());

SearchHits<UserDO> searchHits = elasticsearchRestTemplate.search(query.build(), UserDO.class);

Aggregations aggregations = searchHits.getAggregations();

ParsedStringTerms per_count = aggregations.get("per_count");

Map<String, Long> map = new HashMap<>();

for (Terms.Bucket bucket : per_count.getBuckets()) {

map.put(bucket.getKeyAsString(), bucket.getDocCount());

}

map.forEach((key, value) -> {

System.out.println("key:" + key + " value:" + value);

});

}

@Test

void simple3() {

// 嵌套聚合,根据每个name,查询address包含重庆计数 以及最大年龄 -> 张三:count:1,age:22 李四:count:2,age:23

NativeSearchQueryBuilder query = new NativeSearchQueryBuilder();

BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();

boolQueryBuilder.must(QueryBuilders.matchQuery("address", "重庆"));

query.withQuery(boolQueryBuilder);

query.withPageable(PageRequest.of(0, 5));

query.withSort(SortBuilders.fieldSort("age").order(SortOrder.DESC));

// 作为聚合的字段不能是text类型。所以,author的mapping要有keyword,且通过author.keyword聚合

query.addAggregation(AggregationBuilders.terms("per_count").field("name.keyword")

.subAggregation(AggregationBuilders.max("max_age").field("age"))

);

// 不需要获取source结果集,在aggregation里可以获取结果

query.withSourceFilter(new FetchSourceFilterBuilder().build());

SearchHits<UserDO> searchHits = elasticsearchRestTemplate.search(query.build(), UserDO.class);

Aggregations aggregations = searchHits.getAggregations();

ParsedStringTerms per_count = aggregations.get("per_count");

Map<String, Map<String, Object>> map = new HashMap<>();

for (Terms.Bucket bucket : per_count.getBuckets()) {

Map<String, Object> objectMap = new HashMap<>();

objectMap.put("docCount", bucket.getDocCount());

ParsedMax parsedMax = bucket.getAggregations().get("max_age");

objectMap.put("maxAge", parsedMax.getValueAsString());

map.put(bucket.getKeyAsString(), objectMap);

}

map.forEach((key, value) -> {

System.out.println("key:" + key + " count:" + value.get("docCount") + " maxAge:" + value.get("maxAge"));

});

}

}